Why DARPA wants more operational personnel

The Pentagon’s R&D arm has a lot of smart engineers thinking about the future—but maybe too many, according to one of its program leaders.

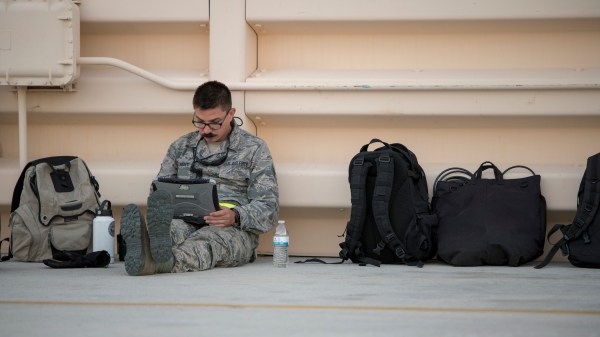

The Defense Advanced Research Projects Agency, perhaps best known for helping launch the internet, needs a cultural shift in order to develop projects for future warfighting, Lt. Col. Dan Javorsek, a program manager in DARPA's Strategic Technology Office, said Thursday at a Noblis conference on automation.

DARPA needs a better balance of technical and operational team members, Javorsek said. That balance would help the agency field technologies more smoothly, with added attention to usability and product viability.

Javorsek cited himself as an example of someone with "feet in both worlds": he has a technical background in physics and has applied it operationally as a fighter pilot.

This kind of operational understanding can help DARPA develop better technology, he said, and it matters especially in an age of algorithms and automation, when the behavior of systems becomes harder to explain.

“The challenge is how do we look at these new algorithmic technologies that do not have the explainability that we are traditionally used to,” he said of the emerging field of automated technologies like artificial intelligence.

When new technologies such as AI-enabled warplanes or autonomous vehicles are tested, the standard for judging their trustworthiness is uneven: room for human error is built in, but the software is expected to be perfect, Javorsek said. Hearing the term "human error" makes him cringe, not because it is inaccurate but because it reflects an "asymmetric value system" that skews safety research toward this way of thinking.

As a result, software draws an undue amount of criticism, he said: there is always a push to test a few more lines of code a few more times.

It's about trust, he added. Rather than holding technologies to standards so strict that they are bound to fail, it's important to leave room for improvement by building trustworthy systems that can be easily improved.

"If we held that metric for human beings, we would never allow anything," he said. But with more operationally minded, non-engineering staff helping to develop the tools of the future, Javorsek thinks that could change.